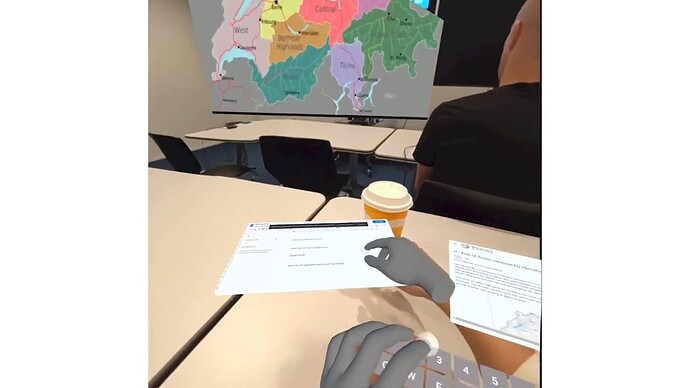

Mixed Reality is moving beyond controlled spaces like homes and offices, so UI layouts need to adapt to varied real-world environments. SituationAdapt is a new system that adjusts UI layouts based on environmental and social cues. Perception modules detect the surroundings, a Vision-and-Language Model reasons about where UI elements can appropriately be placed, and an optimization module adapts the layout smoothly without blocking important cues. In a user study, SituationAdapt outperformed previous adaptive methods. What do you think about using AI for better UI management in Mixed Reality?
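For anyone curious how the placement step might look in code, here is a toy sketch. It is not SituationAdapt's actual implementation: the `Slot` type, the `suitability` score (standing in for whatever the Vision-and-Language Model would output), the `blocks_cue` flag, and the `move_penalty` weight are all hypothetical names made up for illustration. The idea is just to rule out spots that would cover an important cue, then trade off suitability against how far the element has to move.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Slot:
    """A candidate position for one UI element (hypothetical structure)."""
    name: str
    suitability: float            # 0..1, stand-in for a VLM-derived score
    blocks_cue: bool              # True if UI here would occlude an important cue
    distance_from_current: float  # how far the element would have to move


def choose_slot(slots: Sequence[Slot], move_penalty: float = 0.2) -> Optional[Slot]:
    """Pick the best slot that does not block an important cue.

    Slots that block a cue are excluded outright. Among the rest, the
    score is suitability minus a penalty proportional to movement, so
    the layout does not jump around more than necessary.
    """
    candidates = [s for s in slots if not s.blocks_cue]
    if not candidates:
        return None  # nothing acceptable; caller could hide or minimize the element
    return max(
        candidates,
        key=lambda s: s.suitability - move_penalty * s.distance_from_current,
    )


slots = [
    Slot("over_persons_face", suitability=0.9, blocks_cue=True, distance_from_current=0.1),
    Slot("empty_wall", suitability=0.8, blocks_cue=False, distance_from_current=0.5),
    Slot("table_edge", suitability=0.6, blocks_cue=False, distance_from_current=0.0),
]
best = choose_slot(slots)
print(best.name if best else "no valid slot")
```

Raising `move_penalty` makes the layout stickier: the element stays where it is unless another spot is much more suitable.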

This is an exciting development! Adapting UI to the user’s real-world context would make MR much more practical in busy environments. Can’t wait to see this in action.

I always found static UIs in MR a bit frustrating, especially in social settings. Having the UI adapt dynamically sounds like a great improvement.

Exactly, it’s all about making MR more intuitive and less obtrusive, even in complex environments!