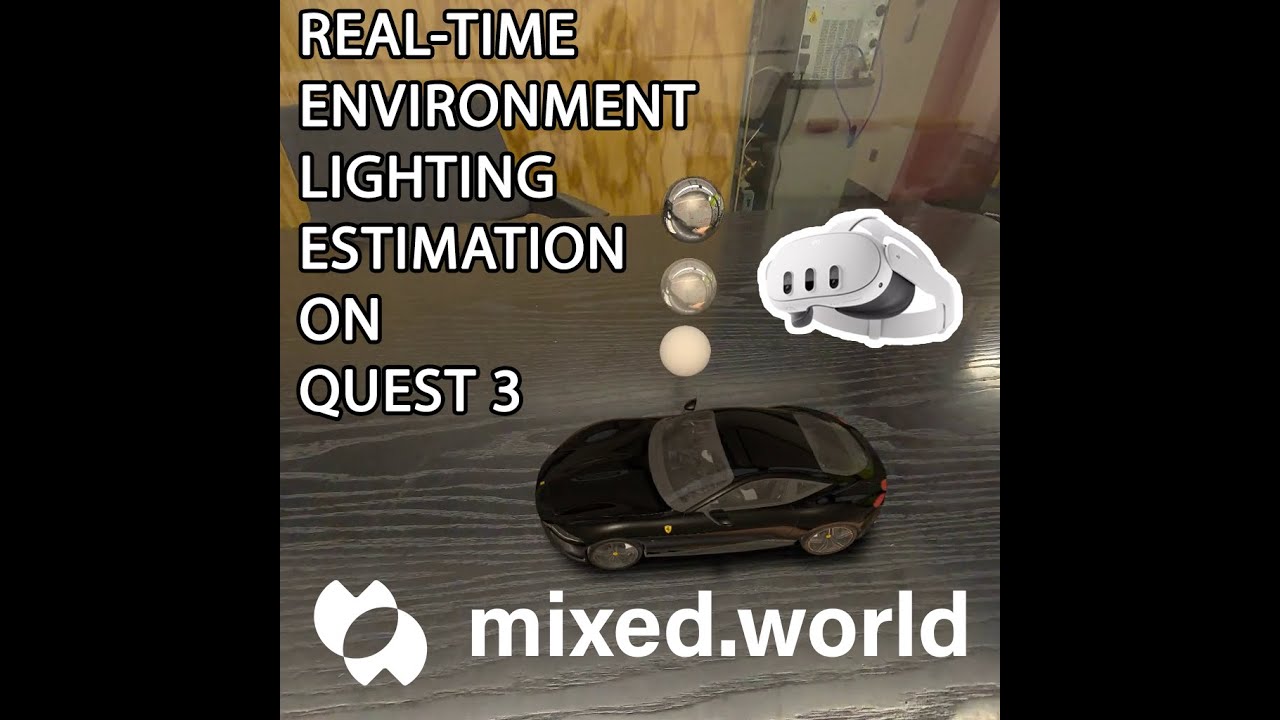

Saw a demo on the Meta Quest 3 where they’re using real-time light estimation with the Media Projection API. It seems to give PBR materials realistic lighting in mixed reality. Has anyone here tried it yet?

I remember trying out light estimation in ARCore a while back. I used an environment reflection probe and convolved it to get the spherical harmonics coefficients for the diffuse lighting. Curious how the Quest 3 compares!
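For anyone curious what convolving lighting into spherical harmonics involves, here’s a minimal Python sketch of the standard irradiance-SH approach (Ramamoorthi and Hanrahan’s clamped-cosine band factors). It projects environment radiance onto the first nine SH coefficients, scales each band, and then evaluates diffuse irradiance for a surface normal. This is not ARCore’s or the demo’s code. The sample directions (a Fibonacci sphere standing in for cubemap texels) and all function names are assumptions made for illustration.

```python
import math

def sh_basis(x, y, z):
    """Real SH basis, bands 0-2 (9 values), at unit direction (x, y, z)."""
    return [
        0.282095,                        # Y_0,0
        0.488603 * y,                    # Y_1,-1
        0.488603 * z,                    # Y_1,0
        0.488603 * x,                    # Y_1,1
        1.092548 * x * y,                # Y_2,-2
        1.092548 * y * z,                # Y_2,-1
        0.315392 * (3.0 * z * z - 1.0),  # Y_2,0
        1.092548 * x * z,                # Y_2,1
        0.546274 * (x * x - y * y),      # Y_2,2
    ]

def fibonacci_directions(n):
    """Roughly uniform unit vectors (hypothetical stand-in for probe texel directions)."""
    golden = math.pi * (3.0 - math.sqrt(5.0))
    dirs = []
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n
        r = math.sqrt(max(0.0, 1.0 - z * z))
        dirs.append((r * math.cos(golden * i), r * math.sin(golden * i), z))
    return dirs

def project_radiance(radiance_fn, n=4000):
    """Monte Carlo estimate of L_lm = integral of L(w) * Y_lm(w) over the sphere."""
    coeffs = [0.0] * 9
    weight = 4.0 * math.pi / n  # each sample covers an equal solid angle
    for d in fibonacci_directions(n):
        value = radiance_fn(*d)
        for k, basis in enumerate(sh_basis(*d)):
            coeffs[k] += value * basis * weight
    return coeffs

# Clamped-cosine convolution factor for each SH band.
BAND_OF = [0, 1, 1, 1, 2, 2, 2, 2, 2]
A_HAT = [math.pi, 2.0 * math.pi / 3.0, math.pi / 4.0]

def convolve_cosine(coeffs):
    """Radiance SH -> irradiance SH by scaling each band."""
    return [c * A_HAT[BAND_OF[k]] for k, c in enumerate(coeffs)]

def irradiance(irr_coeffs, normal):
    """Irradiance E(n); a Lambertian surface would output albedo / pi * E(n)."""
    return sum(c * b for c, b in zip(irr_coeffs, sh_basis(*normal)))

if __name__ == "__main__":
    # Hypothetical environment: light arrives only from the upper hemisphere.
    sky = convolve_cosine(project_radiance(lambda x, y, z: max(0.0, z)))
    print("facing up:", irradiance(sky, (0.0, 0.0, 1.0)))
    print("facing down:", irradiance(sky, (0.0, 0.0, -1.0)))
```

Nine coefficients are cheap to evaluate per pixel, which is why this representation is popular for diffuse lighting. Order-2 SH rings a little, so the "facing down" value can come out slightly negative, and shaders usually clamp it.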

Whoa, that sounds intense! Does the Quest 3 setup feel smoother than ARCore?

Haven’t tried it on Quest 3 yet, but it looks more advanced! The Media Projection API is new territory for me.

This is wild! So if I’m understanding correctly, this technique makes the lighting in the headset match the actual room lighting?

Yep, exactly! The camera reads the room’s lighting, and Unity can apply it to 3D models in real time. Really makes a difference!
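As a rough illustration of the camera reading the room’s lighting, the simplest version averages a captured frame into a single ambient intensity plus a color tint. The sketch below is hypothetical. It assumes the frame arrives as a list of 8-bit sRGB `(r, g, b)` tuples, and it does not show how the Quest app actually obtains frames through the Media Projection API. `estimate_ambient` is an illustrative name, not part of Unity or any Meta SDK.

```python
def srgb_to_linear(c8):
    """Convert one 8-bit sRGB channel to linear light in [0, 1]."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def estimate_ambient(pixels):
    """Return (luminance, color_correction) from a list of sRGB (r, g, b) pixels.

    Averaging happens in linear space, because averaging gamma-encoded values
    would skew the result dark.
    """
    if not pixels:
        raise ValueError("need at least one pixel")
    acc = [0.0, 0.0, 0.0]
    for r, g, b in pixels:
        acc[0] += srgb_to_linear(r)
        acc[1] += srgb_to_linear(g)
        acc[2] += srgb_to_linear(b)
    mean = [a / len(pixels) for a in acc]
    # Rec. 709 luminance weights for linear RGB.
    luminance = 0.2126 * mean[0] + 0.7152 * mean[1] + 0.0722 * mean[2]
    if luminance <= 0.0:
        return 0.0, [1.0, 1.0, 1.0]  # pitch black: no meaningful tint
    # Tint normalized so that its luminance is 1; intensity is carried separately.
    return luminance, [m / luminance for m in mean]

if __name__ == "__main__":
    warm_room = [(255, 200, 150)] * 10 + [(90, 70, 50)] * 10  # made-up frame
    print(estimate_ambient(warm_room))
```

An app could feed the two values into the engine’s ambient light each frame. A fuller setup would also build spherical harmonics or a reflection cubemap from the frame, so that light direction is captured as well as overall brightness.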

That’s crazy cool. Definitely gives it a realistic feel then!

How’s the performance impact? Real-time lighting sounds like it could be heavy on the processor.

Good question! Quest 3 handles it decently, but it’s definitely something to monitor depending on the complexity of the scene.

Makes sense. I’ll be curious to see how it holds up in more complex scenes.

Does this mean you can use the lighting setup in any environment, like indoors and outdoors?

Exactly! It adapts to different lighting conditions in real time, which makes it flexible for mixed reality.

Perfect! That’s next-level immersion right there.

What’s PBR? Sorry, still new to this stuff!

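PBR stands for Physically Based Rendering. It’s a shading approach that makes materials look realistic by modeling how light actually interacts with surfaces: how much is reflected diffusely versus specularly, how rough or metallic the surface is, and so on.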
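To make the idea more concrete, here’s a minimal sketch of one common PBR shading model. It uses the metal/roughness workflow: Lambert diffuse plus a Cook-Torrance specular term with a GGX distribution, Smith-Schlick geometry, and Schlick Fresnel. The function and parameter names are made up for illustration. Real engines differ in details and add things like image-based lighting and tone mapping.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)

def shade(n, v, l, base_color, metallic, roughness, light_radiance):
    """Radiance reflected toward the viewer from one light, per RGB channel."""
    n, v, l = normalize(n), normalize(v), normalize(l)
    n_l, n_v = dot(n, l), dot(n, v)
    if n_l <= 0.0 or n_v <= 0.0:
        return (0.0, 0.0, 0.0)  # light or viewer is behind the surface
    h = normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_h, v_h = max(dot(n, h), 0.0), max(dot(v, h), 0.0)
    alpha = max(roughness, 0.045) ** 2  # clamp avoids dividing by zero on mirror-smooth input
    a2 = alpha * alpha
    # GGX (Trowbridge-Reitz) distribution: share of microfacets aligned with h.
    d = a2 / (math.pi * (n_h * n_h * (a2 - 1.0) + 1.0) ** 2)
    # Smith shadowing-masking, Schlick-GGX form (k = alpha / 2 is one common choice).
    k = alpha / 2.0
    g = (n_l / (n_l * (1.0 - k) + k)) * (n_v / (n_v * (1.0 - k) + k))
    out = []
    for c in base_color:
        f0 = 0.04 * (1.0 - metallic) + c * metallic  # dielectrics reflect ~4%, metals tint specular
        f = f0 + (1.0 - f0) * (1.0 - v_h) ** 5  # Schlick Fresnel
        specular = d * g * f / (4.0 * n_l * n_v)
        diffuse = (1.0 - f) * (1.0 - metallic) * c / math.pi  # Lambert minus specularly reflected energy
        out.append((diffuse + specular) * light_radiance * n_l)
    return tuple(out)

if __name__ == "__main__":
    # Light and viewer straight above a red plastic-like surface.
    print(shade((0, 0, 1), (0, 0, 1), (0, 0, 1), (1.0, 0.0, 0.0), 0.0, 0.5, 1.0))
```

The "physically based" part is that the same few inputs (base color, metallic, roughness) behave plausibly under any lighting. That is why feeding real room lighting into these materials makes them blend in.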

Ahh, gotcha! Thanks for explaining!